|

Improving the Scaling Laws of Synthetic Data with Deliberate Practice

Reyhane Askari-Hemmat*, Mohammad Pezeshki*, Elvis Dohmatob, Florian Bordes, Pietro Astolfi, Melissa Hall, Jakob Verbeek, Michal Drozdzal, Adriana Romero-Soriano

|

|

EvalGIM: A library for Evaluating Generative Image Models

Melissa Hall, Oscar Mañas, Reyhane Askari-Hemmat, Mark Ibrahim, Candace Ross, Pietro Astolfi, Tariq Berrada Ifriqi, Marton Havasi, Yohann Benchetrit, Matthew Muckley, Karen Ullrich, Mike Rabbat, Brian Karrer, Michal Drozdzal, Jakob Verbeek, Adriana Romero-Soriano

|

|

Multi-Modal Language Models as Text-to-Image Model Evaluators

Jiahui Chen, Candace Ross, Reyhane Askari-Hemmat, Koustuv Sinha, Melissa Hall, Michal Drozdzal, Adriana Romero-Soriano [under review]

|

|

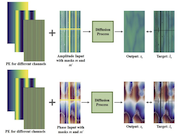

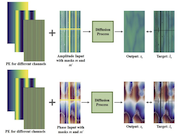

On Improved Conditioning Mechanisms and Pre-training Strategies for Diffusion Models

Tariq Berrada, Pietro Astolfi, Marton Havasi, Matthew J. Muckley, Melissa Hall, Reyhane Askari-Hemmat, Yohann Benchetrit, Karteek Alahari, Michal Drozdzal, Adriana Romero-Soriano, Jakob Verbeek, NeurIPS 2024

|

|

Diffusion-Based In-painting of Corrupted Spectrogram

Mahsa Massoud, Reyhane Askari-Hemmat, Adrian Liu, Siamak Ravanbakhsh, NeurIPS 2024 Workshop on ML for Physical Sciences

|

|

An Introduction to Vision-Language Modeling

F. Bordes, R. Pang, A. Ajay, A. Li, A. Bardes, S. Petryk, O. Mañas, Z. Lin, A. Mahmoud, B. Jayaraman, M. Ibrahim, M. Hall, Y. Xiong, J. Lebensold, C. Ross, S. Jayakumar, C. Guo, D. Bouchacourt, H. Al-Tahan, K. Padthe, V. Sharma, H. Xu, X. Ellen Tan, M. Richards, S. Lavoie, P. Astolfi, R. Askari-Hemmat, J. Chen, K. Tirumala, R. Assouel, M. Moayeri, A. Talattof, K. Chaudhuri, Z. Liu, X. Chen, Q. Garrido, K. Ullrich, A. Agrawal, K. Saenko, A. Celikyilmaz, V. Chandra

|

|

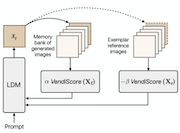

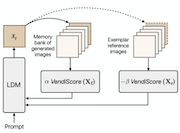

Improving Geo-diversity of Generated Images with Contextualized Vendi Score

Reyhane Askari Hemmat*, Melissa Hall*, Alicia Sun, Candace Ross, Michal Drozdzal, Adriana Romero Soriano, ECCV 2024

|

|

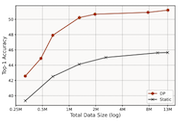

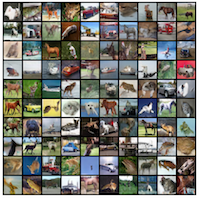

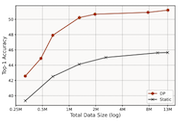

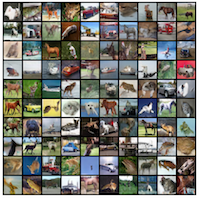

Feedback-guided Data Synthesis for Imbalanced Classification

Reyhane Askari Hemmat, Mohammad Pezeshki, Florian Bordes, Michal Drozdzal, Adriana Romero-Soriano, TMLR 2024

|

|

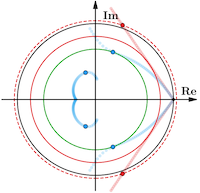

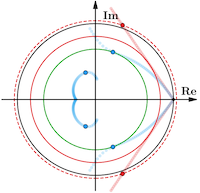

LEAD: Least-Action Dynamics for Min-Max Optimization

Reyhane Askari Hemmat*, Amartya Mitra*, Guillaume Lajoie, Ioannis Mitliagkas, TMLR 2023 featured paper (oral equivalent, presented at ICLR 2024)

|

|

Negative Momentum for Improved Game Dynamics

Gauthier Gidel*, Reyhane Askari Hemmat*, Mohammad Pezeshki, Gabriel Huang, Rémi Le Priol, Simon Lacoste-Julien, Ioannis Mitliagkas

|

|

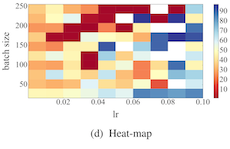

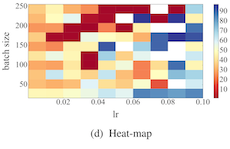

Oríon: Experiment Version Control for Efficient Hyperparameter Optimization

Christos Tsirigotis, Xavier Bouthillier, François Corneau-Tremblay, Peter Henderson, Reyhane Askari Hemmat, Samuel Lavoie-Marchildon, Tristan Deleu, Dendi Suhubdy, Michael Noukhovitch, Frédéric Bastien, Pascal Lamblin, AutoML workshop at ICML

|

|

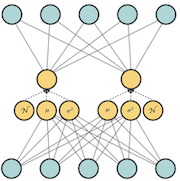

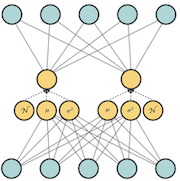

Auto Encoders in PyTorch

A quick implementation of autoencoders, denoising autoencoders, and variational autoencoders in PyTorch.

|

|